Your solar model is wrong — but it can still be useful

Industry analysis shows solar portfolios are underperforming by 6% on average, risking $100 million per GW in lost value. By modeling both weather variability and systematic performance biases, developers can improve energy predictions, financing terms, and long-term project success.

By Chetan Chaudhari and Mark Campanelli

As statistician George Box observed, “all models are wrong, but some are useful.” The solar industry’s challenge is to ensure our models fall into the useful category.

Solar portfolios have been in the news, and not favorably. Recent industry reports from Wells Fargo and kWh Analytics estimate that solar portfolios are underperforming by 6% or more on average, leaving approximately $100 million per gigawatt of value at risk. That’s not a rounding error. For a typical utility-scale developer with a 1 GW pipeline, it’s enough lost value to pursue several additional projects.

There are some well-known suspects: curtailment events when the grid cannot accept power during peak generation, and availability issues when an inverter fails or a hailstorm wipes out an array. But what if our expected generation is also fundamentally off base?

Portfolios are often geographically diverse, so weather variability should average out over time. A few outlier projects may underperform in some years, but shouldn’t the portfolio meet expectations overall? And if so, why is the industry seeing a 6% shortfall on average?

Solar projects are like a daily commute

Consider your daily commute: some days traffic is heavier than others — that’s natural variability. But if you consistently underestimate travel time because you forget about that road closure, that’s systematic bias. Both affect when you arrive at work, but while traffic variability averages out over time, systematic bias persists. You’ll be late every single day until you either account for it or change your route (or the construction ends, which somehow always seems to take longer than indicated).

Solar projects face similar challenges related to shortfalls. Some years will be cloudier than others, but the net impact of weather averages out over time. However, PV modules degrade unevenly, sensors in weather stations and satellites have calibration tolerances, trackers only approximately follow the sun, and soiling patterns vary. All of these factors, and many more, create systematic uncertainties that can introduce performance bias (usually reducing generation) that typically persists over a project’s 20- to 30-year operating life.
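
The commute analogy can be made concrete with a small simulation. The sketch below assumes, purely for illustration, that weather shifts each year’s energy by a zero-mean, normally distributed amount (a hypothetical 5% standard deviation) and that a systematic bias (a hypothetical -3%) applies identically every year. The function name `lifetime_ratios` and all numbers are ours, not taken from any real project or tool.

```python
import random
import statistics


def lifetime_ratios(n_projects, n_years, weather_sd, bias, seed=0):
    """Lifetime-average ratio of actual to expected energy for each simulated project.

    weather_sd: year-to-year weather variability as a fraction (zero-mean, assumed normal)
    bias: systematic modeling error as a fraction, applied identically every year
    """
    rng = random.Random(seed)
    ratios = []
    for _ in range(n_projects):
        # Each year = expected energy (1.0), shifted by the persistent bias and random weather.
        yearly = [1.0 + bias + rng.gauss(0.0, weather_sd) for _ in range(n_years)]
        ratios.append(statistics.fmean(yearly))
    return ratios


weather_only = lifetime_ratios(500, 25, weather_sd=0.05, bias=0.0)
with_bias = lifetime_ratios(500, 25, weather_sd=0.05, bias=-0.03)

# Weather noise mostly cancels over 25 years and 500 projects; the bias does not.
print(f"weather only: mean {statistics.fmean(weather_only):.3f}")
print(f"with bias:    mean {statistics.fmean(with_bias):.3f}")
```

Under these assumptions the portfolio mean stays close to 1.00 with weather variability alone but sits close to 0.97 once the bias is added. Averaging over more years or more projects shrinks the weather noise further, yet leaves the bias untouched.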

The difference between these two uncertainty types determines whether underperformance is an unfortunate surprise that’s recoverable or a persistently biased outcome that we failed to reasonably anticipate.

Change what you can, account for what you can’t

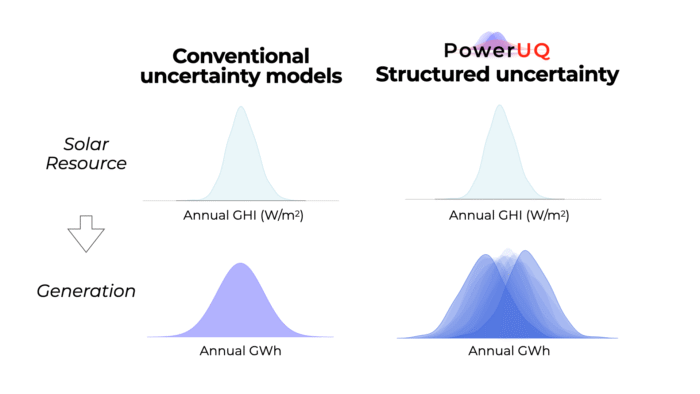

Conventional energy modeling tools treat energy generation as a deterministic process. They take a single “representative” year of resource data as input, assume equipment performs exactly as specified on datasheets, and thus output annual energy estimates with a false sense of accuracy and unknown precision. This approach overlooks the reality that weather variability is distinct from system-performance uncertainty. Both uncertainties should be quantified separately and communicated to stakeholders to fully inform risk-adjusted financial decisions.
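
One way to keep the two uncertainty types separate is a Monte Carlo simulation that samples them independently and reports exceedance values for each. This is a minimal sketch under stated assumptions: energy scales multiplicatively with a normally distributed weather factor and a normally distributed system-performance factor, and every number (baseline energy, standard deviations, sample count) is hypothetical. Here “Pxx” means the energy exceeded in xx% of simulated outcomes, so P90 is the 10th percentile.

```python
import random
import statistics


def exceedance(samples, p):
    """Energy exceeded with probability p% (e.g. P90 = 10th percentile of outcomes)."""
    cuts = statistics.quantiles(samples, n=100, method="inclusive")  # 1st..99th percentiles
    return cuts[99 - p]


def simulate(baseline_gwh, weather_sd, system_sd, n=20_000, seed=1):
    rng = random.Random(seed)
    weather_only, combined = [], []
    for _ in range(n):
        weather = rng.gauss(1.0, weather_sd)  # year-to-year resource variability
        system = rng.gauss(1.0, system_sd)    # uncertainty in how the plant converts that resource
        weather_only.append(baseline_gwh * weather)
        combined.append(baseline_gwh * weather * system)
    return weather_only, combined


w, c = simulate(baseline_gwh=250.0, weather_sd=0.04, system_sd=0.05)
for label, samples in (("weather only", w), ("weather + system", c)):
    print(f"{label:17s} P50 {exceedance(samples, 50):6.1f} GWh   P90 {exceedance(samples, 90):6.1f} GWh")
```

The P50 values come out nearly identical, while the P90 that includes system uncertainty is several GWh lower. Reporting both rows, rather than a single number, is what lets stakeholders see how much of the downside comes from weather and how much from the plant and the model.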

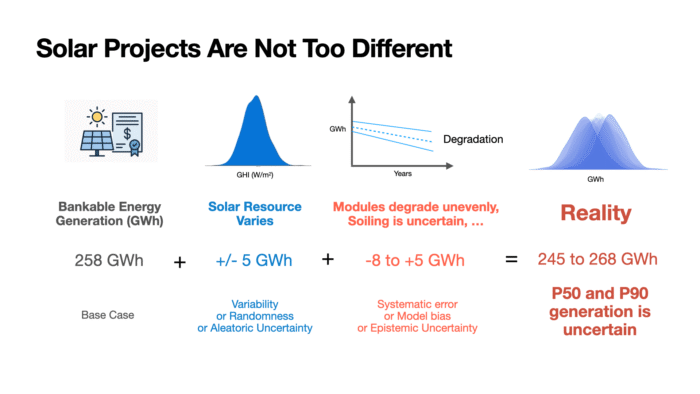

For example, consider a typical 100 MW PV power plant. A conventional model might predict that if the median annual insolation (i.e., a P50 resource year) at a site is 2,000 kWh/m², the plant will produce 258 GWh of electricity in that year. In a particularly cloudy year (i.e., a P90 resource year), the model might reduce insolation to 1,800 kWh/m², estimating 253 GWh of generation.

But real-world performance is rarely so neat. Even in an average weather year, actual generation may fall short (closer to 250 GWh) because the satellite-derived weather data consistently overestimated irradiance by a few percentage points, because some modules degraded at 0.6% per year instead of 0.5%, or because uneven cooling caused cell temperatures to diverge from modeled values. These are systematic biases that don’t “average out.”
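
To see why a seemingly small degradation error matters, the sketch below compares energy under the 0.5% and 0.6% per-year rates mentioned above. It assumes simple compound degradation beginning after year 1 and a 25-year horizon (both our assumptions), and it ignores every other loss mechanism.

```python
def degradation_factors(rate, years):
    """Fraction of year-1 output remaining in each year, assuming compound degradation."""
    return [(1.0 - rate) ** (year - 1) for year in range(1, years + 1)]


expected = degradation_factors(0.005, 25)  # rate assumed in the energy model
actual = degradation_factors(0.006, 25)    # rate the modules actually exhibit

final_year_shortfall = 1.0 - actual[-1] / expected[-1]
lifetime_shortfall = 1.0 - sum(actual) / sum(expected)
print(f"year-25 shortfall:  {final_year_shortfall:.2%}")
print(f"lifetime shortfall: {lifetime_shortfall:.2%}")
```

Under these assumptions, an extra 0.1% per year grows to a shortfall of roughly 2.4% by year 25 and about 1.2% of lifetime energy, a bias that no amount of good weather will offset.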

Now imagine the same for a P90 year. The combination of a weather-related shortfall and unmodeled system biases could push total generation down to 245 GWh — a double hit that’s rarely accounted for when the only uncertainty considered is weather variability.
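
The figures in this example are consistent with a single multiplicative bias applied to both scenarios. Inferring that factor from the average-year case (about 250 GWh actual versus 258 GWh modeled) and applying it to the 253 GWh P90 estimate gives roughly the 245 GWh quoted above. Treating the bias as a constant multiplier is our simplifying assumption.

```python
modeled_p50_gwh = 258.0
actual_p50_gwh = 250.0   # average weather year, with systematic biases present
modeled_p90_gwh = 253.0

# Systematic bias expressed as the ratio of actual to modeled energy.
bias_factor = actual_p50_gwh / modeled_p50_gwh

# The same biases persist in a bad weather year, so they stack on the weather shortfall.
biased_p90_gwh = modeled_p90_gwh * bias_factor
print(f"bias factor {bias_factor:.3f}, biased P90 {biased_p90_gwh:.1f} GWh")
```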

The good news? Some of this shortfall can be mitigated. By identifying and addressing the “road closures” in our energy modeling, such as calibrating irradiance sensors at the project site or testing PV modules through independent labs, we can reduce systematic biases or, at the very least, reasonably guard against them. We can’t eliminate model uncertainty altogether, but we can account for it in our P50 and P90 estimates and integrate it into the quality control of our modeling processes.
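
One common way to fold system and model uncertainty into P-values, assuming the individual uncertainties are independent and approximately normal, is to combine their relative standard deviations by root-sum-square and scale by the normal quantile. The sketch below uses hypothetical uncertainty values and a hypothetical function name; a real assessment would derive the inputs from the sensor, module, and modeling tolerances described above.

```python
import math
from statistics import NormalDist


def exceedance_energy(p50, rel_sds, p):
    """Normal-approximation Pxx: the annual energy exceeded with probability p/100.

    rel_sds: independent relative standard deviations (fractions of P50),
    combined by root-sum-square.
    """
    total_sd = math.sqrt(sum(sd ** 2 for sd in rel_sds))
    z = NormalDist().inv_cdf(p / 100)  # about 1.28 for P90, 2.33 for P99
    return p50 * (1.0 - z * total_sd)


p50 = 250.0                  # GWh, hypothetical
weather = 0.04               # hypothetical one-year weather variability
system = [0.03, 0.02, 0.015] # hypothetical: irradiance data, module performance, model structure
print(f"P90, weather only:     {exceedance_energy(p50, [weather], 90):.1f} GWh")
print(f"P90, weather + system: {exceedance_energy(p50, [weather, *system], 90):.1f} GWh")
```

This treats uncertainty as spread around the P50. A bias that has been identified, such as a known irradiance offset, is better corrected in the P50 itself than left to widen the distribution.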

When they say it’s not about money …

Solar projects represent a sophisticated balance of stakeholder interests. Project owners seek confidence that projects will generate enough energy on average over the project life to meet their target returns (P50 scenario). Lenders want assurance that even in a bad weather year (P90 or P99 scenario), projects will generate sufficient income after expenses to cover their debt obligations.

If energy estimates repeatedly miss the mark, projects are perceived as higher-risk investments. Lenders then require either higher owner contributions (equivalent to a bigger down payment on a house) or a higher interest rate to compensate for this risk. Either way, costlier financing reduces project profitability and could dissuade future investments in solar-powered energy infrastructure.
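
The link between energy uncertainty and leverage can be sketched with a simplified debt-sizing calculation: the lender sizes annual debt service so that P90 cash flow covers it with a minimum debt service coverage ratio (DSCR), then converts that payment into a loan amount with the standard annuity formula. Every input below (energy price, operating cost, DSCR, interest rate, tenor, and the two P90 values) is hypothetical, and real term sheets include many more conditions.

```python
def loan_capacity(p90_gwh, price_per_mwh, opex, min_dscr, rate, years):
    """Largest loan whose level annual payment is covered by P90 cash flow at min_dscr."""
    cash_flow = p90_gwh * 1_000 * price_per_mwh - opex  # GWh -> MWh; dollars per year
    annual_debt_service = cash_flow / min_dscr
    # Present value of a level annuity: payment * (1 - (1 + r)^-n) / r
    return annual_debt_service * (1 - (1 + rate) ** -years) / rate


common = dict(price_per_mwh=40.0, opex=2_000_000, min_dscr=1.3, rate=0.06, years=18)
narrow = loan_capacity(237.0, **common)  # P90 reflecting weather uncertainty only
wide = loan_capacity(232.0, **common)    # P90 after adding system uncertainty
print(f"debt capacity falls by ${narrow - wide:,.0f}")
```

In this toy case a 5 GWh lower P90 removes about $1.7 million of debt capacity, which the owner must replace with equity. Raising `rate` shows the other lever a lender can pull.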

Ultimately, project success depends not just on total energy generation but also on whether its predictions are credible enough for the project to get financed. When models consistently underestimate risk, the entire industry pays through higher financing costs and reduced investment.

The path forward also involves building feedback loops between operating projects and future models: as projects generate real performance data, we can identify systematic biases and refine our expectations. This isn’t about achieving perfect predictions, because that’s impossible. It’s about building models useful and credible enough to support the decisions we’re asking them to inform.
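
A basic version of that feedback loop compares each operating project-year’s metered energy with what the model predicts when re-run on the weather that actually occurred, then asks whether the average ratio differs from 1 by more than noise would explain. The sketch below generates synthetic data with a known, hypothetical -2.5% bias so the estimate can be checked. The function and variable names are illustrative, and the normal-approximation confidence interval assumes independent project-years, which real fleet data may not satisfy.

```python
import random
import statistics


def estimate_bias(actual, modeled, confidence=0.95):
    """Mean of (actual / weather-adjusted modeled) - 1, with a normal-approximation interval."""
    errors = [a / m - 1.0 for a, m in zip(actual, modeled)]
    mean = statistics.fmean(errors)
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * statistics.stdev(errors) / len(errors) ** 0.5
    return mean, (mean - half_width, mean + half_width)


# Synthetic fleet: 200 project-years, hypothetical -2.5% bias plus 2% residual scatter.
rng = random.Random(7)
modeled = [rng.uniform(150.0, 300.0) for _ in range(200)]  # GWh, weather-adjusted predictions
actual = [m * (1.0 - 0.025 + rng.gauss(0.0, 0.02)) for m in modeled]

bias, (low, high) = estimate_bias(actual, modeled)
print(f"estimated bias {bias:+.2%} (95% interval {low:+.2%} to {high:+.2%})")
```

Once an interval like this excludes zero, the bias is a candidate for correction in future P50 estimates rather than something to wait out.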

Chetan Chaudhari and Mark Campanelli, Ph.D. are co-founders of PowerUQ, the solar industry’s first dedicated software platform for uncertainty quantification. Chetan has over 16 years of experience developing techno-economic energy modeling and data science tools for the renewable energy sector, including SunPower’s fleet of over half a million projects. An applied mathematician at heart and a software engineer by trade, Mark is a recognized practitioner in uncertainty quantification, with prior research roles at NREL and NIST.
